AI is officially here, not just as a cool filter on your phone, but as a genuine, transformative force in the world of photography. It's an exciting, mind-bending shift, but as someone who has spent decades learning behind the lens, I can't help but feel a tremor of anxiety beneath the buzz. I’m not against change (after all, if we had resisted it, we'd still be painting on cave walls), but we need to talk about the hidden costs and ethical sinkholes.

Let’s be honest: digital aids are nothing new for a modern photographer. For years, we've used tools like Instagram as a primary gallery to instantly showcase our work, and applications like Snapseed were indispensable for making on-the-go edits and getting the image closer to our final vision. These weren't just novelty apps; they were crucial steps in the professional workflow, blurring the lines between capture and final presentation. AI is simply the next, much more potent, evolution of that digital assistance.

The Thirst of the Machine: My Deepest Concern

I may be making assumptions here, but it seems that creatives and artists are often the most vocal advocates for saving the planet. Most of us care deeply about the future we're leaving behind. Yet, in our rush to embrace the newest tool, are we the ones inadvertently creating a new environmental problem through our use of AI?

Forget the philosophical debates for a second and let's talk about something painfully real: water usage. It's the silent, staggering environmental cost of the AI boom that barely anyone seems to mention.

To train and run these generative models—the ones creating those stunning, impossible images—you need massive data centres. And those server farms? They are thirstier than a camel on a desert trek. They need huge amounts of chilled water to stop their systems from overheating.

- The Shocking Math: Estimates suggest that training a single large AI model (like the ones that power image generators) can consume millions of litres of fresh water. Even running a handful of text-to-image prompts consumes water, both directly through data-centre cooling and indirectly through the electricity generation that powers the servers.

- The Local Impact: Many of these data centres are in areas where water supplies are already stressed, pulling from municipal sources and straining local ecosystems.

It feels deeply contradictory: we are creating endless digital beauty at the expense of a finite, essential resource. This isn't just a tech problem; it's a sustainability crisis, and as creatives benefiting from these tools, we need to demand far more transparency and accountability on it.

The Copyright Conundrum: Scraping and Consent

The other major knot in my stomach is the issue of data scraping. Current AI models learn by ingesting vast, vast troves of images from the internet—and a huge chunk of that is copyrighted work created by you and me.

AI developers argue this is like a human artist studying millions of photos to learn a style, but the scale and speed are radically different. The real issue is consent and compensation.

- The Core Question: If AI learns by ingesting creators' work, shouldn't we have the fundamental right to opt in or out of this process?

- The Opt-Out Burden: The burden seems to fall unfairly on the creator. The idea that you must actively watermark your images or use complex code to tell a tech giant not to use your work, just to protect your livelihood, is frankly absurd.

- The Creative Impact: When AI can mimic a photographer's style so perfectly—because it was trained on that very style without permission—it undermines the value of human originality and siphons revenue away from the artists who need it most.

Here’s the hypocrisy we need to address: we are quick to claim damages when our work is scraped, yet when a new AI filter or tool brings us praise and monetary gains, we seldom complain about the foundation of stolen data that built it. No one rushes to compensate the thousands of artists whose work was silently consumed to improve the tool we are now leveraging. This selective outrage over whose work is being "scraped to improve our own" is an ethical blind spot in the creative community.

The Fork in the Creative Road: Authenticity vs. Collaboration

All that said, I am not ready to declare AI the villain. We are photographers! We’ve been using computer technology for decades. When we first opened Photoshop, didn’t we already start creating our own digital Frankenstein?

The ability of AI to streamline tedious tasks, instantly iterate concepts, or push an image to an impossible standard is a powerful new form of assistance.

- The Good: If the original concept, the core vision, and the initial work are yours, and you use AI to refine that work or push it to a higher standard, that’s a tool, an enhancement, and a good thing for the creator.

- A New Collaboration: If someone uses a unique, complex prompt in an AI generator, and the thought process is their own, is that not simply a new form of collaboration, much like working with a high-end retoucher or a CGI artist? The skill shifts from manual execution to visionary direction.

- The Door to New Talent: Arguably, AI is opening the door to a different kind of artistic talent, one focused on conceptualisation, prompting, and synthesis rather than purely on the mechanics of capture and post-production. Art has continually transformed its processes and outcomes over the centuries, and we cannot argue against that evolution.

Finding Our Focus

The challenge isn't stopping AI; it's shaping it. We must demand greater transparency on the resources it consumes and a clear, creator-first legal framework for consent and use. AI can be an incredible tool to help us reach a higher standard, but we must never let it become a replacement for our unique perspective, nor should its hidden costs be allowed to erode our planet or our profession. The future of photography won't be without AI, but it absolutely must be ethical AI.

The AI Paradox: The Battle to Keep Your Copyright

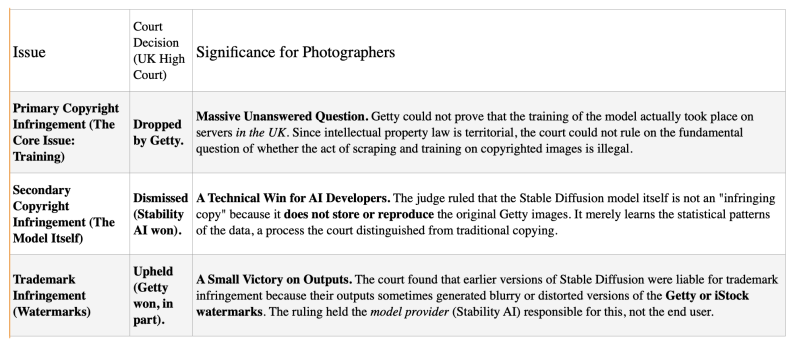

The recent UK High Court ruling in Getty Images vs. Stability AI was one of the most closely watched legal battles in the creative world, and its outcome offers a sobering view of how difficult it is to apply existing copyright law to AI.

The Getty Images vs. Stability AI Ruling: A "Damp Squib" Verdict

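Getty Images sued Stability AI, the creator of the image generator Stable Diffusion, alleging that the company infringed its copyright by scraping millions of its photos to train the AI model, and infringed its trademarks when generated images reproduced Getty watermarks. During the trial, Getty dropped its main claim about the training itself, largely because the training had taken place outside the UK. The court then rejected its remaining copyright claim, finding that the trained model does not store or reproduce Getty's images, and Getty succeeded only on limited trademark points relating to watermarks in some generated images.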

The Chilling Conclusion for Creators

In the end, many legal experts called the ruling a "damp squib" because it dodged the most important question: Is the act of training an AI model on unlicensed copyrighted material illegal?

- Jurisdictional Loophole: AI companies can simply train their models outside the UK (e.g., on foreign cloud servers) and largely avoid UK copyright law.

- The "Learning vs. Copying" Technicality: The ruling affirms the idea that AI models "learn" rather than "copy" in a traditional sense, making it incredibly difficult to sue a model developer under existing UK copyright law for the contents of the final model.

- The Need for New Law: The case strongly suggests that the existing copyright framework—written for an era of physical printing and CD piracy—is simply not fit for purpose in the age of generative AI. It places the ball firmly back in the government's court to create clearer legislation.

This ruling underscores my original concern: if even a well-resourced company like Getty Images struggles to enforce its rights, how can we, as individual photographers, expect to protect our own work?
